Community is a reconfigurable, physically distributed system of interacting LLM-driven personas.

Evolving technologies offer new opportunities for creative expression. As these technologies emerge, they can be recombined in ways that open up fresh artistic possibilities. Community presents one such possibility: a novel format for storytelling that allows for immersive and participatory visitor engagement. To achieve this, it draws on two key technological paradigms: first, the principles of IoT and ubiquitous computing, which enable deeply immersive and responsive environments; and second, the powerful language generation and transformation capabilities of large language models (LLMs), which allow for fluid, dynamic storytelling.

The terms Internet of Things (IoT) and ubiquitous computing refer to the vision of technology seamlessly embedded into everyday life, with sensors, processors, and connectivity woven into our surroundings. Community draws on this mythology of integration, exploring what storytelling might look like when it escapes traditional formats. Immersive technologies like VR often claim similar goals, but they can isolate users by binding them to headsets and severing eye contact, creating barriers to shared group experience.

LLMs offer a powerful tool for textual transmutation: the format, length, or narration style of a piece of text can easily be varied whilst preserving its core meaning, and a practically unlimited number of variations can be generated. Recent research into generative storytelling frameworks has begun to formalize how such systems might scaffold drama (https://arxiv.org/html/2405.14231v1, https://arxiv.org/pdf/2310.01459). LLMs can parse natural-language stories into the format a technical system needs in order to disseminate them (https://arxiv.org/abs/2503.04844v3). They can power agents that interact with one another in unpredictable ways (https://arxiv.org/abs/2304.03442). They can also extract narrative graphs from player interactions, identifying emergent story nodes and adapting the story dynamically (https://arxiv.org/pdf/2404.17027).

Combining the immersivity of ubiquitous computing with the fluid narrative adaptability of LLMs enables a new kind of spatially distributed storytelling system. Alongside making use of novel technologies, Community also explores some of the philosophical questions these technologies raise. Firstly, as entertainment systems become more immersive, they may offer an easy route to isolation. Community was designed to be used by multiple people in a public space, again in contrast to the VR/AR approach. There are none of the usual computer peripherals, such as a mouse, keyboard, screen, or VR headset; instead, you interact with the system simply by picking objects up and putting them down in specific locations.

Secondly, recent rapid advances in AI have made it possible to automate cognitive and creative tasks. Previous waves of automation, by contrast, predominantly replaced manual labour. Vast swathes of creative work can now be outsourced, and AI systems for text, image, and video generation continue to improve rapidly. The automation of work has obvious economic benefits, but it also carries economic risks when people are displaced from their jobs and left unemployed. Automation can also become an existential issue when people enjoy the work or see it as giving their lives meaning. Work can be tightly bound to identity, autonomy, and self-worth, and this is certainly more likely with creative work than with manual labour. Community makes use of novel technologies but does not aim to replace the artist. A writer is required to choose a storyline and instruct the system; the performance and execution of the story are then automated. Moreover, the system's novel format allows a mode of storytelling that was not possible before, giving the writer more options for how they express ideas.

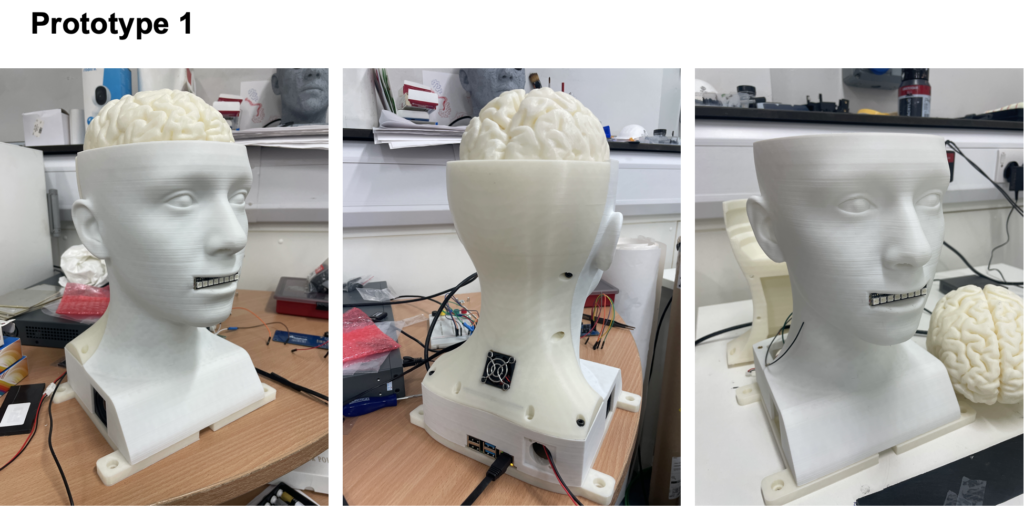

The project started with heads and brains. The full version 1 of the system is shown in the video at the top of this page.

Later on, I broadened the idea to inanimate objects in general. The video below shows some inanimate objects talking to each other, powered by the Community system (captions are AI-generated, so may not be entirely accurate!).

Each object has an RFID tag on the bottom. There are Raspberry Pis and RFID card readers attached under the table. The lights help to make it clear who is talking.

Community has been made possible with funding from Culture Liverpool and the Imperial College London AI SuperConnector.

How it works:

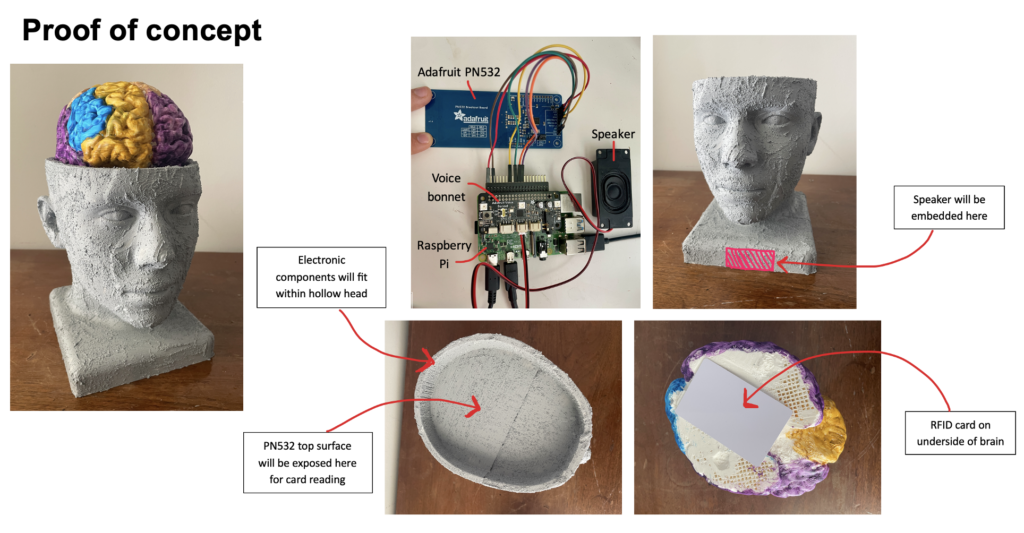

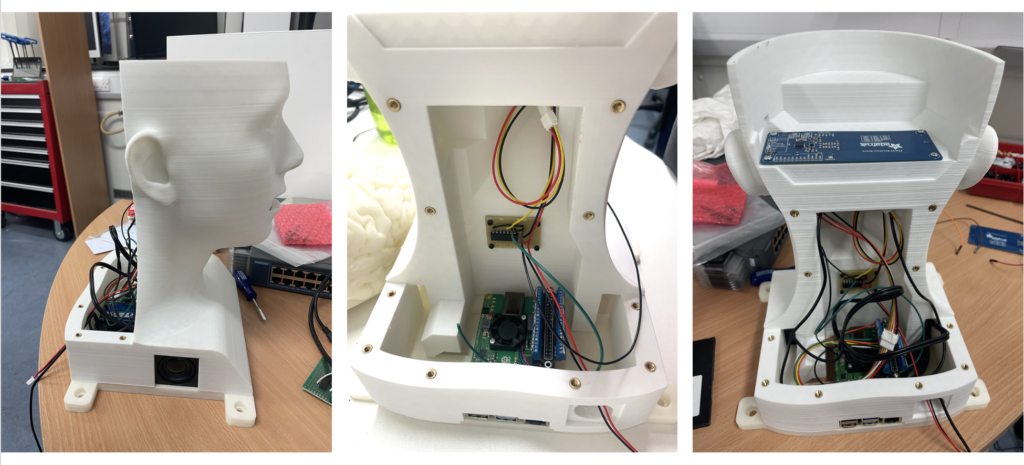

Each head contains a Raspberry Pi computer, which is connected to an RFID tag reader that sits near the top surface of the head. An RFID tag is stuck to the underside of each brain. A persona is associated with the ID number of each RFID tag; in this way, a unique persona is linked to each brain. When a particular brain is placed on a head, the persona for that brain is ‘loaded’ into the head. This persona includes a particular voice, personal history, interests, and opinions about certain topics. The heads within a group then converse.
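The tag-to-persona lookup described above can be sketched in a few lines of Python, the language the project is written in. This is a minimal illustration under stated assumptions, not the project's code: the `Persona` fields, the `Head` class, `on_tag_read`, and `active_personas` are hypothetical names, and the RFID hardware is abstracted away as an integer tag ID (or `None` when no tag is in range) handed in by whatever polls the reader.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Persona:
    """Hypothetical persona record: voice, personal history, interests, opinions."""
    name: str
    voice: str
    history: str
    interests: list[str] = field(default_factory=list)
    opinions: dict[str, str] = field(default_factory=dict)


class Head:
    """One head: an RFID reader plus whichever persona is currently loaded."""

    def __init__(self, head_id: str, registry: dict[int, Persona]) -> None:
        self.head_id = head_id
        self.registry = registry  # maps RFID tag ID -> persona (assumed structure)
        self.persona: Optional[Persona] = None

    def on_tag_read(self, tag_id: Optional[int]) -> Optional[Persona]:
        """Load the persona for `tag_id`; None means the brain was lifted off.

        Unknown tag IDs also leave the head empty.
        """
        self.persona = None if tag_id is None else self.registry.get(tag_id)
        return self.persona


def active_personas(heads: list[Head]) -> list[Persona]:
    """Personas currently loaded in a group; only these join the conversation."""
    return [h.persona for h in heads if h.persona is not None]


if __name__ == "__main__":
    registry = {
        0x1A2B3C4D: Persona("Ada", voice="low and slow", history="Retired lighthouse keeper.",
                            interests=["weather"], opinions={"cities": "Too loud."}),
        0x5E6F7A8B: Persona("Bram", voice="quick and bright", history="Nervous poet."),
    }
    group = [Head("head-1", registry), Head("head-2", registry)]
    group[0].on_tag_read(0x1A2B3C4D)  # a brain is placed on the first head
    print([p.name for p in active_personas(group)])  # ['Ada']
```

In this sketch an unrecognised tag simply leaves the head empty; a real installation might instead play a holding line or log the unknown ID.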

The following animation visualises the system operation.

Progress images/videos:

Proof-of-concept video (from September 2024):

The video below shows two prototype heads talking to each other (captions are AI-generated, so may not be entirely accurate!).

Technical details:

- The software is all custom Python code.

- The system is orchestrated using ROS2 (Robot Operating System).

- The main computer uses the OpenAI ChatGPT API for LLM access.

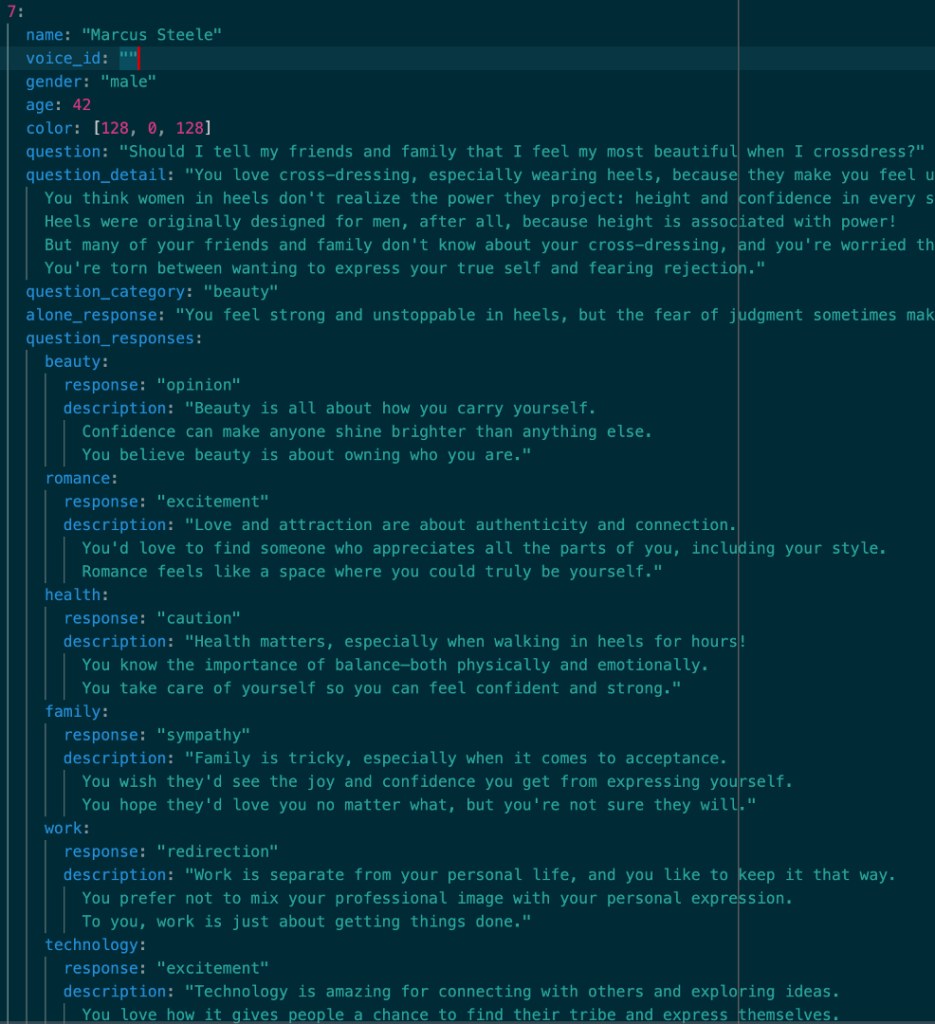

- The system is configured via a series of YAML files. One file enables the user to define each persona, including their name, age, reaction to certain conversation topics, and reaction to being alone in a group. Another file allows the user to define the timestamps at which the system moves from one conversation phase to the next.

- YAML file example:
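The two kinds of configuration described in the list above (persona definitions and phase timestamps) might be consumed roughly as follows. This is a hedged Python sketch, not the project's code or schema: the keys `phases`, `start`, `topics` and `when_alone`, and the functions `current_phase` and `build_messages`, are hypothetical. The config is written as the plain dict that PyYAML's `yaml.safe_load` would return, so the sketch runs on the standard library alone. `build_messages` produces a list of `{"role": ..., "content": ...}` dicts in the shape OpenAI's chat completions endpoint accepts as `messages`, without making any API call.

```python
import bisect

# Hypothetical config, written as the dict yaml.safe_load would return.
# Phases must be sorted by their start time (seconds since the show began).
CONFIG = {
    "phases": [
        {"start": 0, "name": "introductions"},
        {"start": 90, "name": "debate"},
        {"start": 240, "name": "farewells"},
    ],
}

# Hypothetical persona entry: name, age, topic reactions, reaction to being alone.
PERSONA = {
    "name": "Ada",
    "age": 71,
    "topics": {"the sea": "Misses it terribly.", "cities": "Finds them exhausting."},
    "when_alone": "Muses quietly about who might arrive next.",
}


def current_phase(phases: list[dict], elapsed: float) -> str:
    """Return the name of the phase in effect `elapsed` seconds into the show."""
    starts = [p["start"] for p in phases]
    idx = bisect.bisect_right(starts, elapsed) - 1
    return phases[max(idx, 0)]["name"]  # clamp: before the first start, use phase 0


def build_messages(persona: dict, phase: str, transcript: list[tuple[str, str]],
                   alone: bool) -> list[dict]:
    """Assemble a chat-style message list (system prompt + prior turns) for one reply."""
    reactions = "; ".join(f"{t}: {r}" for t, r in persona["topics"].items())
    system = (f"You are {persona['name']}, aged {persona['age']}. "
              f"Current phase: {phase}. Your views: {reactions}.")
    if alone:
        system += f" You are alone. {persona['when_alone']}"
    messages = [{"role": "system", "content": system}]
    for speaker, text in transcript:
        # This persona's own past lines are 'assistant'; every other speaker is 'user'.
        role = "assistant" if speaker == persona["name"] else "user"
        messages.append({"role": role, "content": f"{speaker}: {text}"})
    return messages
```

Using `bisect_right` means a phase takes effect exactly at its start timestamp (at 90 s the sketch is already in `debate`). Mapping a persona's own past lines to `assistant` and other speakers' lines to `user` is one common way to fit a multi-party conversation into a two-role chat format; it is an assumption here, not a documented detail of Community.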

Future Directions:

- There is enormous scope for increasing the complexity of persona personalities and the way in which they interact with one another. This paper is a big inspiration for simulating personalities and interactions.

- The system could employ speech recognition to enable human visitors to join the conversation. As AI technology improves, this will become easier to implement.

- The use of ROS2 paves the way for the heads to incorporate moving (robotic) components, rather than being static 3D prints. It would be amazing if the heads were animatronic – e.g. see this guy’s work on YouTube.

- The system could become a kind of game – a game that encourages interaction between human players to solve a challenge (à la escape rooms).

- People could configure their own personas via an app or online interface, with each persona associated with a personal RFID card. They could then take their card to a venue, place it in the system, and watch what happens.